Happy Earth Day 2024.

Ask people worldwide “is climate change a problem?” and the answer is usually a clear “yes.” But ask a follow-up, “what is the real nature of that problem?” and the picture is murkier. Answers might range from “we’re burning too much fossil fuel,” to “there are simply too many people,” to “global warming is destroying crops,” to “rainfall patterns are shifting – look at the record floods in the UAE and drought and wildfire in Hawaii,” to “we’re too consumer-oriented,” to “it’s the mis-directed policies of those rascals in that other political party.” The causes identified and the villains vilified would vary from country to country, from demographic to demographic – and from person to person. Eight billion people see the problem eight billion different ways – not just with respect to its seriousness, but with respect to its inherent features and its origins.

Whew! The good news is that the population includes professionals who make their living in the study and practice of (big-picture) problem formulation in general.

The previous LOTRW post looked at the thoughts of one such expert, Oguz Acar, on the particular challenge of harnessing artificial intelligence to problem solving. Along the way, he observed that “…to identify, analyze, and delineate problems… necessitates a comprehensive understanding of the problem domain and ability to distill real-world issues.”

Mr. Acar went on to identify four key elements of the process. His description of these is best read verbatim, but condensed a bit, they boil down to something like:

Problem diagnosis – identifying the core problem to be solved. Typically, this involves looking deeper than the mere symptoms to discern the underlying problems.

Deconstruction – breaking down complex problems into simpler subproblems.

Reframing – changing the perspective from which the problem is viewed.

Constraint design – bounding the problem.

Hmm. Is analysis of this type being applied to the challenge of climate change? What fruit does it yield?

Start with the problem diagnosis. Eunice Foote got the ball rolling. In the mid-nineteenth century she concluded that water vapor and CO2 play a role in heating the atmosphere. Tyndall, Arrhenius, and others who followed refined the picture. Decades of measurement since then document growth trends in atmospheric concentrations of greenhouse gases. Models of increasing diagnostic power predict the atmospheric temperature changes likely to result over the coming century or two based on different scenarios for future fossil-fuel use, the transition from fossil fuels to renewable energy sources, etc. Those models also reveal the changes in the hydrologic cycle likely to accompany the temperature changes. Other models reveal accompanying sea level rise and ocean acidification. Research in all these areas and more is ongoing, and the findings are documented every several years in voluminous United Nations IPCC climate change assessments.

The problem has also been reframed. It is today often seen as one wedge of global change – reflecting corresponding declines in natural habitat, biomass and biodiversity, and environmental quality, for example.

In past decades the problem has also been reframed more radically – in terms of human choice. In such a social-science perspective the underlying problems look quite different. Here is an example of ten recommendations for policymakers:

1. View the issue of climate change holistically, not just as the problem of emissions reductions.

2. Recognize that, for climate policymaking, institutional limits to global sustainability are at least as important as environmental limits.

3. Prepare for the likelihood that social, economic, and technological change will be more rapid and have greater direct impacts on human populations than climate change.

4. Recognize the limits of rational planning.

5. Employ the full range of analytical perspectives and decision aids from natural and social sciences and the humanities in climate change policymaking.

6. Design policy instruments for real world conditions rather than try to make the world conform to a particular policy model.

7. Incorporate climate change into other more immediate issues, such as employment, defense, economic development, and public health.

8. Take a regional and local approach to climate policymaking and implementation.

9. Direct resources into identifying vulnerability and promoting resilience, especially where the impacts will be largest.

10. Use a pluralistic approach to decision-making.

The authors contributing to this framing recognized that the social challenges here belong to a class of so-called wicked problems. And that in turn has prompted others to see the deeper problems underlying climate change as not merely societal, but stemming from beliefs, values and attitudes, even bordering on the spiritual.

Now we’re getting warm.

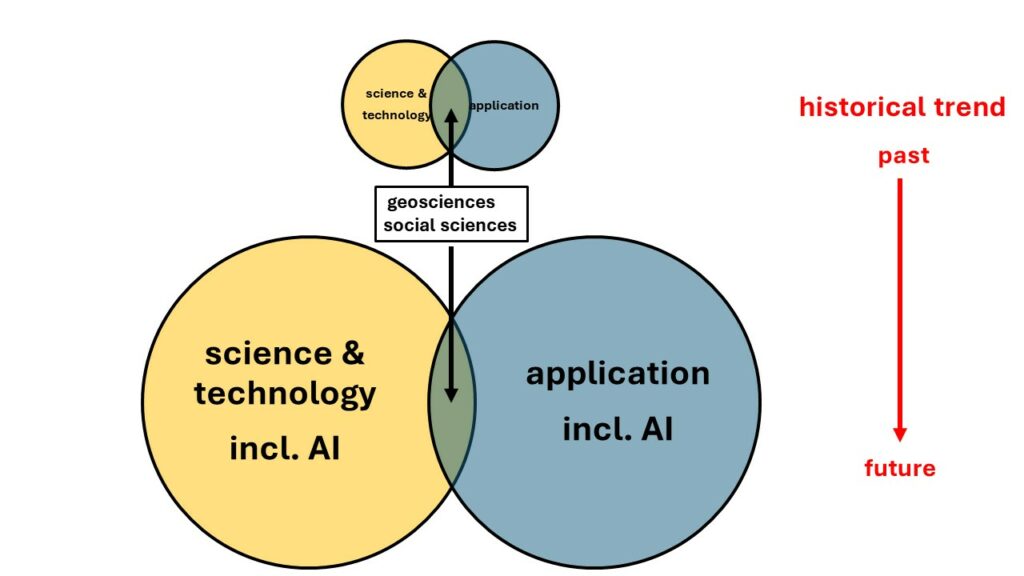

More about this in a moment, but let’s first turn our attention to the two remaining elements of problem formulation. Unsurprisingly, the vast scale, stupefying complexity, and multi-faceted nature of climate change are matched by countless deconstructions and constraint designs (these two go hand in hand). Early on, the physical problem was split into mitigation (reduction of fossil fuel use and transition to renewable forms of energy) and adaptation (building resilience to the coming changes in the hydrologic cycle, patterns and severity of extremes of heat and cold, flood, and drought that are now inevitable as the result of the greenhouse gas buildup to date). The natural and social framings of the problem are spawning as many deconstructions as there are national, regional, and local political and demographic boundaries worldwide. Deconstructions have also emerged along lines of individual professional and academic disciplines, or to reflect contributions needed from myriad communities of practice.

This gives the appearance of chaos. Pessimists might accurately note that there are overlaps, gaps, and resulting inefficiencies in all this that we can ill afford in terms of the overall cost and the slow rate of progress relative to the urgency of the problem. But such multiple, trial-and-error approaches are far more rapidly distinguishing profitable paths forward from dead ends than any kind of monolithic, top-down approach ever would. Think about it. Climate change is not slow-onset; it’s rapid-onset compared with the time required for eight billion people to agree on what we should do. This multiplicity of efforts also has the merit of transforming the problem from one to be solved by a small minority of the population, surrounded by eight billion critics, to one being attacked by eight billion participants. We all have skin in the game; it turns out that we all have talents and skills and perspectives to offer as well. Earth is no place for spectators.

Pessimists might also note that whatever the problem reframing, the cost is stupefying. And we’re failing to pay the bill. According to a recent New York Times article:

Experts estimate that at least $1 trillion a year is needed to help developing countries adapt to hotter temperatures and rising seas, build out clean energy projects and cope with climate disasters.

Fact is, that sum pales beside the $100 trillion (roughly $5 trillion a year) thought to be needed for food, water, and energy infrastructure investment and renovation over the next two decades. But this mountain of money is money we’re paying ourselves. Bottom line? All of us, in one way or another, intentionally or unconsciously, are formulating the climate change/global change problem – diagnosing it, deconstructing it, reframing it, constraining it. And even as we continually hone all that, we’re moving from problem definition into action. We’re putting people to work, locally, everywhere, re-greening our planet – and maybe correcting some long-standing inequities, building a more unified, peaceful global society – in the process.

So happy Earth Day! This is how it feels when things are going well.